In this Laravel tutorial, “Laravel 12 import very large csv into database with seeder”, you will learn how to import large CSV files into a database step by step, with practical examples.

Laravel 12 handles very large CSV imports efficiently by using LazyCollection for memory-safe streaming, chunking, and bulk inserts in seeders. This approach avoids timeouts and high memory usage when populating a database from massive files of 1 GB or more.

In this article, we’ll walk through a production-safe approach to importing a very large CSV file into a MySQL database using Laravel 12, Seeder, and plain PHP—without relying on external libraries like Laravel Excel.


Why Use Seeders for Large Imports

Seeders provide a structured, repeatable way to populate databases with initial or bulk data in Laravel. For very large CSVs, loading the whole file at once (for example with file() or file_get_contents()) risks exhausting PHP's memory limit and causing execution timeouts. LazyCollection instead streams rows lazily, one at a time, without loading the entire file into memory. Combined with chunking (e.g., 500 or 1000 rows per batch) and bulk inserts, memory usage stays low regardless of file size.
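The streaming and chunking idea can be sketched in plain PHP with generators, which is roughly what LazyCollection::make(), skip() and chunk() do for you. This is an illustration under simplifying assumptions (an in-memory stream instead of a real file, two-row chunks), not Laravel's implementation; readCsvRows() and chunkRows() are hypothetical helper names.

```php
<?php
// Plain-PHP sketch of lazy streaming + chunking (the idea behind LazyCollection).
// Assumption: an in-memory stream stands in for public/uploads/posts.csv.

/**
 * Yield one parsed CSV row at a time, so only the current row is held in memory.
 */
function readCsvRows($handle): Generator
{
    // null length = no line-length limit; empty escape = RFC 4180-style quoting.
    while (($row = fgetcsv($handle, null, ',', '"', '')) !== false) {
        yield $row;
    }
}

/**
 * Group rows from any iterable into arrays of at most $size rows.
 */
function chunkRows(iterable $rows, int $size): Generator
{
    $chunk = [];
    foreach ($rows as $row) {
        $chunk[] = $row;
        if (count($chunk) === $size) {
            yield $chunk;
            $chunk = [];
        }
    }
    if ($chunk !== []) {
        yield $chunk; // the last chunk may be smaller
    }
}

$handle = fopen('php://temp', 'r+');
fwrite($handle, "id,title\n1,A\n2,B\n3,C\n4,D\n5,E\n");
rewind($handle);

$header = fgetcsv($handle, null, ',', '"', ''); // consume the header row, like ->skip(1)

$chunkSizes = [];
foreach (chunkRows(readCsvRows($handle), 2) as $chunk) {
    // In the seeder, one bulk INSERT would run here per chunk.
    $chunkSizes[] = count($chunk);
}
fclose($handle);

echo implode(',', $chunkSizes), PHP_EOL; // prints "2,2,1"
```

Only one chunk is ever materialized at a time, so peak memory depends on the chunk size rather than on the file size.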

Step 1: Create Laravel Project

In this step, we will create a fresh Laravel project. If you already have an existing Laravel installation, you can safely skip this step.

Open your terminal and run the following command to create a new Laravel project:

composer create-project laravel/laravel csv-import

Step 2: Create Migration

In this step, we will create a migration for the posts table.

Run the following command to generate the migration file:

php artisan make:migration create_posts_table

Next, open the generated migration file at database/migrations/xxxx_xx_xx_create_posts_table.php and update it with the required table structure:

<?php

use Illuminate\Database\Migrations\Migration;
use Illuminate\Database\Schema\Blueprint;
use Illuminate\Support\Facades\Schema;

return new class extends Migration
{
    /**
     * Run the migrations.
     */
    public function up(): void
    {
        Schema::create('posts', function (Blueprint $table) {
            $table->id();
            $table->string('title');
            $table->text('description')->nullable();
            $table->string('author')->nullable();
            $table->string('slug')->unique();
            $table->string('status')->default('draft'); // draft, published
            $table->timestamp('published_at')->nullable();
            $table->timestamps();
        });
    }

    /**
     * Reverse the migrations.
     */
    public function down(): void
    {
        Schema::dropIfExists('posts');
    }
};

After updating the migration, run the following command to create the posts table in the database:

php artisan migrate

Step 3: Create Seeder

In this step, we will create a database seeder to insert records into the posts table.

Run the command below to generate the seeder:

php artisan make:seeder PostSeeder

Now open the seeder file at database/seeders/PostSeeder.php and add the CSV import logic that inserts the records into the database:

<?php

namespace Database\Seeders;

use Illuminate\Database\Console\Seeds\WithoutModelEvents;
use Illuminate\Database\Seeder;
use Illuminate\Support\Facades\DB;
use Illuminate\Support\LazyCollection;

class PostSeeder extends Seeder
{
    /**
     * Run the database seeds.
     */
    public function run(): void
    {
        DB::disableQueryLog(); // Make sure executed queries are not kept in memory

        DB::table('posts')->truncate();

        LazyCollection::make(function () {
            $filePath = public_path('uploads/posts.csv');

            if (!file_exists($filePath)) {
                $this->command->error("CSV file not found at: {$filePath}");
                return;
            }

            $handle = fopen($filePath, 'r');

            if ($handle === false) {
                $this->command->error('Unable to open CSV file.');
                return;
            }

            // Length 0 = no line-length limit, so long rows are not split.
            // fgetcsv() already returns the fields as an array (quoted commas
            // included), so yield the row as-is; re-splitting it with
            // implode()/explode() would break quoted fields.
            while (($row = fgetcsv($handle, 0)) !== false) {
                yield $row;
            }

            fclose($handle);
        })
            ->skip(1) // Skip the header row
            ->chunk(500)
            ->each(function (LazyCollection $chunk) {
                $now = now(); // One timestamp per chunk

                $records = $chunk->map(function ($row) use ($now) {
                    return [
                        'title' => $row[1],
                        'description' => $row[2],
                        'author' => $row[3],
                        'slug' => $row[4],
                        // `?:` also catches empty cells, which `??` alone would keep as ''
                        'status' => ($row[5] ?? null) ?: 'draft',
                        'published_at' => ($row[6] ?? null) ?: null,
                        'created_at' => $now,
                        'updated_at' => $now,
                    ];
                })->toArray();

                DB::table('posts')->insert($records);
            });
    }
}

Explanation

- Disables the query log so executed queries are not kept in memory during the import. The log is off by default in Laravel; this call guarantees it stays off even if a package or debugging tool enabled it.

- Truncates the posts table (deletes all existing records).

- Uses LazyCollection to efficiently process large CSV files without loading everything into memory.

- Loads data from public/uploads/posts.csv.

- Checks if the CSV file exists and is readable; otherwise shows an error.

- Reads the CSV row by row using fgetcsv().

- Reading with fgetcsv() inside a loop keeps memory low. The “classic” mistake is loading all lines into an array with file() or file_get_contents(); avoid that for large files.

- Skips the first row (header row).

- Processes rows in chunks of 500 and inserts each chunk with one multi-row INSERT, which is much faster than 500 separate inserts.

- Maps each CSV row to database fields (title, description, author, etc.).

- Sets default values (e.g., status = ‘draft’).

- Adds created_at and updated_at timestamps. Because the seeder uses DB::table()->insert() (Query Builder) rather than an Eloquent model, Laravel does not populate these columns automatically, so they would stay NULL unless set manually.

- Bulk inserts each chunk into the posts table.

This seeder efficiently imports a large CSV file into the posts table using chunking and lazy loading.
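To make the mapping step concrete, here is a standalone sketch of the row-to-record conversion. It assumes the column order id, title, description, author, slug, status, published_at (inferred from the $row[1] to $row[6] indexes used in the seeder), and mapRow() and valueOrDefault() are hypothetical helpers. It also shows why an empty cell needs more than `??`: the null-coalescing operator only applies when the column is missing, not when it holds an empty string.

```php
<?php
// Standalone sketch of the row-mapping step (hypothetical helpers, not Laravel code).
// Assumed column order: id, title, description, author, slug, status, published_at.

/**
 * Return the cell at $index, or $default when the cell is missing OR empty.
 * `$row[$index] ?? $default` alone would keep an empty string "".
 */
function valueOrDefault(array $row, int $index, ?string $default): ?string
{
    $value = $row[$index] ?? '';
    return $value !== '' ? $value : $default;
}

function mapRow(array $row, string $now): array
{
    return [
        'title'        => $row[1],
        'description'  => valueOrDefault($row, 2, null),
        'author'       => valueOrDefault($row, 3, null),
        'slug'         => $row[4],
        'status'       => valueOrDefault($row, 5, 'draft'),
        'published_at' => valueOrDefault($row, 6, null), // "" would be rejected by a strict-mode TIMESTAMP column
        'created_at'   => $now, // Query Builder inserts do not set timestamps automatically
        'updated_at'   => $now,
    ];
}

// str_getcsv() parses a single line the same way fgetcsv() parses a file line:
// the quoted "Hello, world" stays one field.
$row = str_getcsv('7,"Hello, world","Long text",Jane,hello-world,,', ',', '"', '');
$now = date('Y-m-d H:i:s');

$record = mapRow($row, $now);
echo $record['title'], ' | ', $record['status'], PHP_EOL; // prints "Hello, world | draft"
```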

Performance Tips

💡 Tip: Adjust the chunk size in your seeder based on your server’s memory and performance capabilities to achieve optimal results.

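In extreme cases, you can also raise memory_limit in php.ini, but prioritize chunking. max_execution_time does not matter here, because PHP's CLI (which runs php artisan db:seed) has no execution time limit by default.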
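Chunk size is also capped by the database. Assuming MySQL with native prepared statements, a single statement can bind at most 65,535 placeholders, so the largest possible chunk is that limit divided by the number of inserted columns. The arithmetic is sketched below; maxRowsPerInsert() is a hypothetical helper.

```php
<?php
// Sketch: upper bound on rows per multi-row INSERT imposed by MySQL's limit of
// 65,535 placeholders per prepared statement (assumes native prepared statements).

const MYSQL_MAX_PLACEHOLDERS = 65535;

function maxRowsPerInsert(int $columnsPerRow): int
{
    // Every row binds one placeholder per column.
    return intdiv(MYSQL_MAX_PLACEHOLDERS, $columnsPerRow);
}

// The seeder inserts 8 columns per row:
// title, description, author, slug, status, published_at, created_at, updated_at.
$columns = 8;
$chunkSize = 500;

echo 'Placeholders per 500-row chunk: ', $chunkSize * $columns, PHP_EOL; // prints 4000
echo 'Largest possible chunk: ', maxRowsPerInsert($columns), PHP_EOL;     // prints 8191
```

In practice, rows with long text columns can hit MySQL's max_allowed_packet limit well before the placeholder limit, which is another reason to stay in the 500-2000 row range.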

Step 4: Create Controller

In this step, we will create a PostController to handle the CSV file upload. This controller receives the CSV file and saves it where the seeder expects to find it; the import itself runs when you execute the seeder in Step 7.

Generate the controller using the following command:

php artisan make:controller PostController

<?php

namespace App\Http\Controllers;

use Illuminate\Http\Request;

class PostController extends Controller
{
    /**
     * Show the upload form.
     */
    public function index()
    {
        return view('upload');
    }

    /**
     * Upload the CSV file.
     */
    public function upload(Request $request)
    {
        $request->validate([
            // CSV files are often detected as text/plain, so allow txt as well.
            // max is in kilobytes: 102400 KB = 100 MB.
            'file' => 'required|mimes:csv,txt|max:102400',
        ]);

        $file = $request->file('file');
        $fileName = 'posts.csv';

        // Move the file to public/uploads, where the seeder reads it
        $file->move(public_path('uploads'), $fileName);

        return back()->with('success', 'CSV file uploaded successfully!')
            ->with('file', $fileName);
    }
}

Explanation

- Displays upload form (index method)

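- Validates the file type and size (max 100 MB). PHP's upload_max_filesize and post_max_size settings (2M and 8M by default) must be at least this large, otherwise bigger uploads fail before Laravel's validation can accept them.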

- Saves file as posts.csv in public/uploads

- Returns success message after upload
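Because max:102400 is expressed in kilobytes, it helps to compare the PHP limits against it in the same unit. Below is a small sketch that converts php.ini shorthand values (such as 2M or 1G) to bytes and reports whether upload_max_filesize and post_max_size allow a 100 MB upload. iniSizeToBytes() is a hypothetical helper; run it under the same PHP configuration that serves your web requests (php --ini shows which files the CLI loads, and they may differ from the web server's).

```php
<?php
// Sketch: compare php.ini upload limits with the controller's max:102400 rule.
// iniSizeToBytes() is a hypothetical helper for the K/M/G shorthand used in php.ini.

function iniSizeToBytes(string $value): int
{
    $value = trim($value);
    $number = (int) $value;                 // "100M" -> 100
    $unit = strtoupper(substr($value, -1));
    return match ($unit) {
        'G' => $number * 1024 ** 3,
        'M' => $number * 1024 ** 2,
        'K' => $number * 1024,
        default => $number,                 // plain byte count
    };
}

$ruleBytes = 102400 * 1024; // Laravel's max rule for files is in kilobytes: 100 MB

foreach (['upload_max_filesize', 'post_max_size'] as $directive) {
    $raw = (string) ini_get($directive);
    $limit = iniSizeToBytes($raw);
    // post_max_size = 0 disables the POST size limit.
    $ok = $limit >= $ruleBytes || ($directive === 'post_max_size' && $limit === 0);
    echo "{$directive} = {$raw}: ", $ok ? 'large enough' : 'too small for 100 MB uploads', PHP_EOL;
}
```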


Step 5: Create Blade File to Upload CSV

In this step, we will create a Blade view to upload the CSV file. Create the following file: resources/views/upload.blade.php

<!DOCTYPE html>
<html>
<head>
    <title>Laravel 12 Import Very Large CSV into Database With Seeder</title>
    <link href="https://cdn.jsdelivr.net/npm/bootstrap@5.3.8/dist/css/bootstrap.min.css" rel="stylesheet">
</head>
<body>
    <div class="container mt-5">
        <div class="card">
            <h5 class="card-header">Laravel 12 Import Very Large CSV into Database With Seeder - ItStuffSolutions</h5>
            <div class="card-body">
                <!-- Success/Error Messages -->
                @if(session('success'))
                    <div class="alert alert-success">{{ session('success') }}</div>
                @endif

                @if(session('error'))
                    <div class="alert alert-danger">{{ session('error') }}</div>
                @endif

                @if($errors->any())
                    <div class="alert alert-danger">
                        <ul class="mb-0">
                            @foreach($errors->all() as $error)
                                <li>{{ $error }}</li>
                            @endforeach
                        </ul>
                    </div>
                @endif

                <!-- Upload Form -->
                <form action="{{ route('posts.upload') }}" method="POST" enctype="multipart/form-data" class="mb-4 border p-4 rounded bg-light">
                    @csrf
                    <div class="row align-items-end">
                        <div class="col-md-5">
                            <label class="form-label fw-bold">Select File (CSV)</label>
                            <input type="file" name="file" class="form-control" required>
                        </div>
                        <div class="col-md-3">
                            <button type="submit" class="btn btn-primary w-100">Upload</button>
                        </div>
                    </div>
                </form>
            </div>
        </div>
    </div>
    <script src="https://cdn.jsdelivr.net/npm/bootstrap@5.3.8/dist/js/bootstrap.bundle.min.js"></script>
</body>
</html>

This view will contain a simple form that allows users to upload a CSV file, which will be stored in the public directory for processing.

Step 6: Create Routes

In this step, we will define routes to handle the CSV file upload request.

Open the routes/web.php file and add routes for the upload form and for the PostController method that stores the uploaded CSV file.

<?php

use Illuminate\Support\Facades\Route;
use App\Http\Controllers\PostController;

Route::controller(PostController::class)->group(function () {
    Route::get('upload', 'index')->name('posts.index');
    Route::post('upload', 'upload')->name('posts.upload');
});


Step 7: Run the Seeder

In this step, we will first upload the CSV file that we want to import.

Start the Laravel development server by running:

php artisan serve

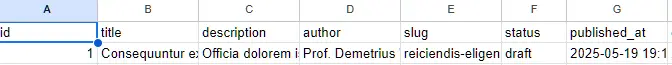

Next, open your browser, navigate to http://127.0.0.1:8000/upload, and upload the CSV file. The CSV should use the following format:

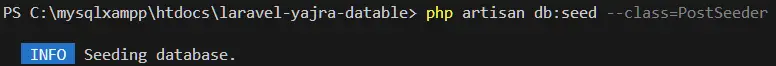
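Because the seeder reads columns by position, a file with a different column order would be imported into the wrong fields without any error. A hypothetical pre-check like the one below compares the header row against the expected layout before seeding; one option would be to call it on $request->file('file')->getRealPath() in the controller before moving the file. The column names in EXPECTED_HEADER are an assumption based on the seeder's mapping; adjust them to match your actual file.

```php
<?php
// Hypothetical pre-check: does the CSV header match the column order the seeder expects?
// EXPECTED_HEADER is an assumption inferred from the seeder's $row[0]..$row[6] mapping.

const EXPECTED_HEADER = ['id', 'title', 'description', 'author', 'slug', 'status', 'published_at'];

function hasExpectedHeader(string $path, array $expected): bool
{
    $handle = fopen($path, 'r');
    if ($handle === false) {
        return false;
    }
    $header = fgetcsv($handle, null, ',', '"', '');
    fclose($handle);

    if ($header === false) {
        return false; // empty file
    }

    // Spreadsheet exports may put a UTF-8 byte order mark before the first column name.
    $header[0] = preg_replace('/^\xEF\xBB\xBF/', '', (string) $header[0]);
    $header = array_map(fn ($name) => strtolower(trim((string) $name)), $header);

    return $header === $expected;
}

$path = tempnam(sys_get_temp_dir(), 'csv');

file_put_contents($path, "\xEF\xBB\xBFid,title,description,author,slug,status,published_at\n1,A,,,a,,\n");
$ok = hasExpectedHeader($path, EXPECTED_HEADER);

file_put_contents($path, "title,slug\nA,a\n");
$bad = hasExpectedHeader($path, EXPECTED_HEADER);

unlink($path);
var_dump($ok, $bad); // bool(true) bool(false)
```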

After the upload is complete, run the seeder to begin importing the data into the posts table. Execute the following command:

php artisan db:seed --class=PostSeeder

Once the process finishes, verify that the records have been successfully inserted into the database.
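One way to verify the result is to compare the number of data rows in the CSV with the number of rows in the table (for example with DB::table('posts')->count() in php artisan tinker). Counting lines with a tool such as wc -l can overcount, because a quoted field may span several lines. The sketch below is a hypothetical plain-PHP helper that counts records with fgetcsv() instead; it assumes the first row is a header and ignores blank lines.

```php
<?php
// Sketch for the verification step: count CSV data rows (header excluded) so the
// total can be compared with the number of rows in the posts table.
// countCsvDataRows() is a hypothetical helper; it assumes the first row is a header.

function countCsvDataRows(string $path): int
{
    $handle = fopen($path, 'r');
    if ($handle === false) {
        throw new RuntimeException("Unable to open {$path}");
    }

    $count = 0;
    $isHeader = true;
    while (($row = fgetcsv($handle, null, ',', '"', '')) !== false) {
        if ($isHeader) {
            $isHeader = false; // skip the header row
            continue;
        }
        if ($row === [null]) {
            continue; // fgetcsv() returns [null] for a blank line
        }
        $count++;
    }
    fclose($handle);

    return $count;
}

// The quoted description spans two physical lines but is still one record.
$path = tempnam(sys_get_temp_dir(), 'csv');
file_put_contents($path, "id,title,description\n1,A,\"line one\nline two\"\n2,B,plain\n\n");
$dataRows = countCsvDataRows($path);
unlink($path);

echo $dataRows, PHP_EOL; // prints 2
```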

Conclusion

Importing very large CSV files into Laravel 12 databases via seeders combines streaming with LazyCollection, chunked processing, and bulk database inserts for optimal memory efficiency and speed. This method scales to millions of rows without crashing servers, making it ideal for production seeding or data migrations. Developers can adapt the example for custom validation, relationships, or queues to handle enterprise-scale imports reliably.

Common Pitfalls

Avoid these frequent issues during large CSV imports to prevent failures and performance bottlenecks.

- Incorrect CSV Parsing: Using explode() on raw lines fails with commas in quoted fields; always use fgetcsv() for proper handling.

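- Memory Overload: Collecting every row before inserting loads the entire file into RAM. Stick to chunks of 500-2000 rows, depending on row size, and keep the chunk size multiplied by the column count below MySQL's limit of 65,535 placeholders per prepared statement.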

- Foreign Key Constraints: Bulk inserts fail if referenced tables lack data. Temporarily disable the checks with DB::statement('SET FOREIGN_KEY_CHECKS=0;') or Schema::disableForeignKeyConstraints(), and re-enable them once the import finishes.

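- No Transaction Rollback: Without a transaction, a failure midway through leaves the chunks already inserted in the table. Wrap the import in DB::transaction() so a failure rolls everything back instead of committing partial data; this gives all-or-nothing behavior at the cost of one long-running transaction.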
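The transaction pitfall can be illustrated with a minimal sketch in plain PDO. Assumptions: the pdo_sqlite extension is available, and an in-memory SQLite table stands in for MySQL's posts table; insertChunk() and importAll() are hypothetical helpers. All chunks run inside one transaction, so a duplicate slug in the second chunk also rolls back the first.

```php
<?php
// Sketch: chunked multi-row INSERTs with PDO, wrapped in one transaction so a
// failure in any chunk rolls back the whole import.
// Assumptions: pdo_sqlite is available; SQLite in memory stands in for MySQL.

function insertChunk(PDO $pdo, array $rows): void
{
    // One "(?, ?)" placeholder group per row => a single multi-row INSERT.
    $groups = implode(', ', array_fill(0, count($rows), '(?, ?)'));
    $stmt = $pdo->prepare("INSERT INTO posts (title, slug) VALUES {$groups}");

    $params = [];
    foreach ($rows as $row) {
        array_push($params, $row['title'], $row['slug']);
    }
    $stmt->execute($params);
}

function importAll(PDO $pdo, array $chunks): bool
{
    $pdo->beginTransaction();
    try {
        foreach ($chunks as $chunk) {
            insertChunk($pdo, $chunk);
        }
        $pdo->commit();
        return true;
    } catch (PDOException $e) {
        $pdo->rollBack(); // undo every chunk inserted so far
        return false;
    }
}

$pdo = new PDO('sqlite::memory:');
$pdo->setAttribute(PDO::ATTR_ERRMODE, PDO::ERRMODE_EXCEPTION);
$pdo->exec('CREATE TABLE posts (id INTEGER PRIMARY KEY, title TEXT NOT NULL, slug TEXT NOT NULL UNIQUE)');

$chunks = [
    [['title' => 'A', 'slug' => 'a'], ['title' => 'B', 'slug' => 'b']],
    [['title' => 'C', 'slug' => 'c'], ['title' => 'Duplicate', 'slug' => 'a']], // violates UNIQUE(slug)
];

$ok = importAll($pdo, $chunks);
$rowsAfter = (int) $pdo->query('SELECT COUNT(*) FROM posts')->fetchColumn();

echo $ok ? 'committed' : 'rolled back', ", rows: {$rowsAfter}", PHP_EOL; // prints "rolled back, rows: 0"
```

In Laravel, the equivalent would be running the chunked inserts inside DB::transaction(function () { ... }). Keep DB::table('posts')->truncate() outside that closure: on MySQL, TRUNCATE TABLE causes an implicit commit, so it cannot be rolled back.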

FAQ

Q1: How large a CSV can this handle?

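Because rows are streamed instead of loaded all at once, memory usage stays roughly constant, so files of 1 GB or more are practical with proper chunking; the approach has been tested with up to 10M rows on standard servers. Note that the upload form accepts at most 100 MB, so for larger files copy the CSV to public/uploads/posts.csv directly before running the seeder.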

Q2: Should I use Laravel Excel package instead?

For simple cases, yes. For massive, unformatted CSVs, native fgetcsv() with LazyCollection is typically faster because it avoids the package's overhead.

Q3: Why should I avoid Laravel Excel for very large CSV files?

Laravel Excel is great for small-to-medium files but:

1. Loads more data into memory

2. Adds overhead for 1GB+ files

3. Can cause memory exhaustion

Plain PHP CSV handling is faster and more memory-efficient.

Q4: Why do we disable query logging during imports?

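Laravel keeps executed queries in memory only when the query log is enabled. It is disabled by default, but a package or debugging tool may turn it on, and during a large import the log would then grow with every insert, consuming RAM and slowing execution. Calling DB::disableQueryLog() guarantees it stays off.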